The way "data quality" is being talked about is wrong, it misses important points about the problem of data in real world AI projects.

My view is that the largest room for improvement in model accuracy is not in the either/or of algorithm / data "quality".

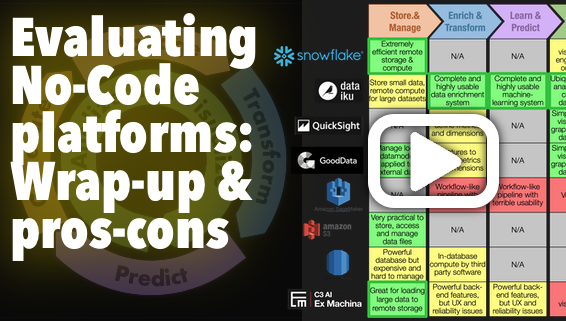

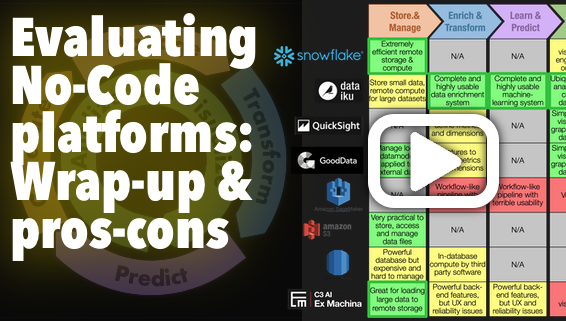

How do Dataiku, C3.ai's ExMachina, Sagemaker, Snowflake, Quicksight and Gooddata join datasets, modify/enrich data, train a machine learning model, access and store data and create visualization graphs. With videos.

Any solution to a productivity problem must address both organizational and cognitive rationality. I start my review journey into data platforms by loading a CSV to C3.ai Ex Machina and Dataiku DSS to understand how they leverage UX to meaningfully represent the data workflow.

The current discussion about the putative waning role of data scientists has to do with the technology that is available to non-data scientists.

I am starting a multi-episode review of the platforms in this space. The first question is: what are the drivers of the data and AI workflows?

AI software development is exactly like any other software development, and completely different at the same time. AI is the process that blurs the boundary between data and software. The data becomes the algorithm. It's the ultimate implementation of the vision of Alan Turing's universal machine.

Public hype about AI algorithms doing everybody's work for them, doomsday predictions of AI taking over humanity, AI is talked about as if the algorithms had agency. They don't. AI doesn't do what a lot of people pretend it does.

How do small teams in a regular business try to get value out of AI? Let's take a break from marveling at protein folding, AlphaZero and dancing robots. Let's talk about normal people trying to do AI. This is the first in a series of about 5 posts on getting started with AI projects.